The goal of this Colab notebook is to capture the distribution of Steam banners and sample from it with a "lightweight GAN".

- Acquire a dataset, e.g. one of the versions proposed with Steam-OneFace,

- Run Steam_Lightweight_GAN.ipynb to train a "lightweight GAN" from scratch.

The dataset consists of Steam-OneFace-small, which is a small version of Steam-OneFace.

It features 993 RGB Steam banners, resized from 300x450 to 256x256 resolution.

The banners were selected to contain exactly one face, based on the two face detection modules face_alignment and retinaface.

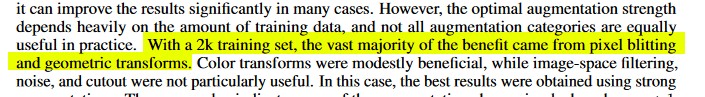

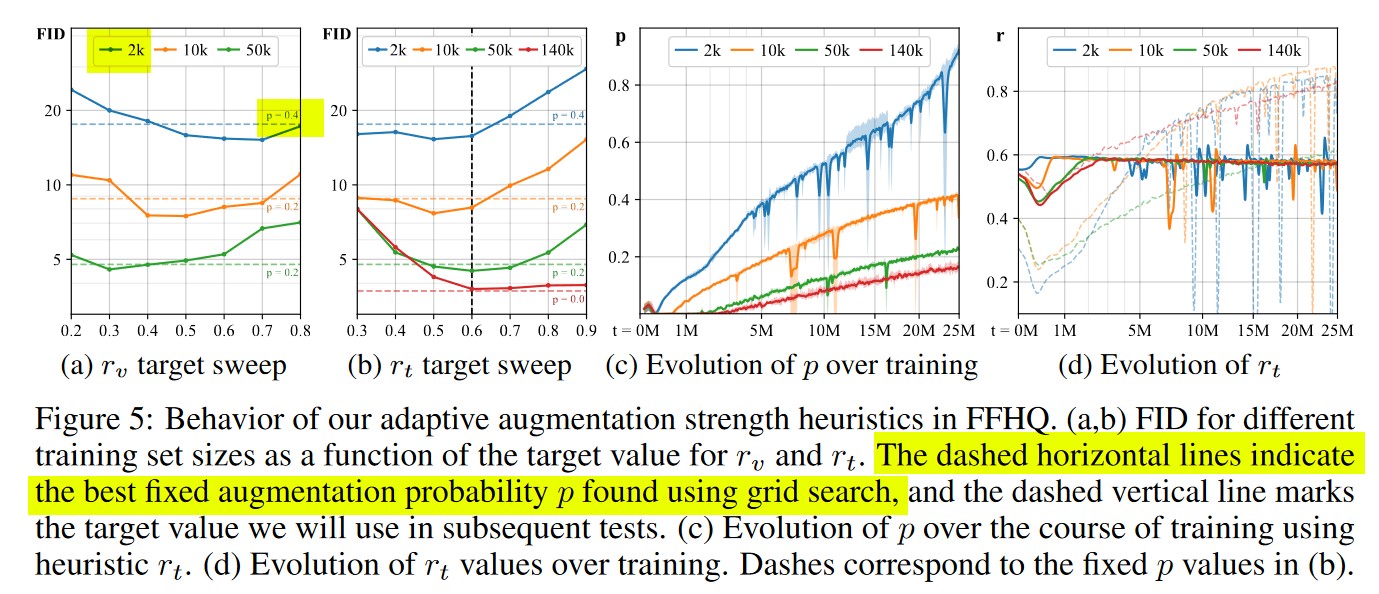

Following the remark for datasets with ~2k images in the paper for StyleGAN2-ADA:

- I have settled on a fixed augmentation probability equal to 0.4,

- the augmentation was initially constrained to translation.

After 27k iterations, the discriminator (D) loss was much lower than the generator (G) loss, and close to zero, so the color augmentation was added.

Caveat: in the StyleGAN2-ADA paper, it is mentioned that "color" was only slightly beneficial.

After 54k iterations, for the same reason, the cutout augmentation was added.

Caveat: in the StyleGAN2-ADA paper, it is mentioned that "cutout" was detrimental to the results.
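The staged augmentation schedule described above can be summarized in a short sketch. The function name `aug_types_at` is hypothetical, and treating the 27k and 54k marks as inclusive boundaries is an assumption; in the actual run, the augmentation types were changed manually between training sessions.

```python
from typing import List

# Fixed augmentation probability, as stated in the text.
AUG_PROB = 0.4


def aug_types_at(iteration: int) -> List[str]:
    """Return the augmentation types active at a given iteration.

    Hypothetical helper: thresholds come from the text, and the
    inclusive boundaries are an assumption.
    """
    types = ["translation"]      # used from the start of training
    if iteration >= 27_000:
        types.append("color")    # added once the D loss got close to zero
    if iteration >= 54_000:
        types.append("cutout")   # added later, for the same reason
    return types


for it in (0, 27_000, 54_000):
    print(it, AUG_PROB, aug_types_at(it))
```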

With a Tesla T4 (16 GB VRAM), the mini-batch size could be set to 64 images. Because the mini-batch size is greater than 32, gradient accumulation is not needed.

Automatic Mixed Precision is toggled ON.

Depending on the GPU provided by Google Colab, the total training time may vary widely.

With a Tesla T4, an iteration requires 1.6 seconds. For 150k iterations, the total training time is expected to be slightly less than 70 hours.
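As a quick check of this estimate, the arithmetic can be written out as below. The helper name `training_hours` is hypothetical, and the computation ignores any overhead from checkpointing or Colab session restarts.

```python
def training_hours(num_iterations: int, seconds_per_iteration: float) -> float:
    """Convert an iteration count and a per-iteration cost into hours."""
    return num_iterations * seconds_per_iteration / 3600


# Figures from the text: 150k iterations at 1.6 seconds each on a Tesla T4.
hours = training_hours(150_000, 1.6)
print(f"{hours:.1f} hours")  # 66.7 hours
```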

With a Tesla K80, an iteration will take much longer. Moreover, you would have to decrease the mini-batch size to 32, and maybe rely on gradient accumulation.
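To make the trade-off concrete, here is a minimal sketch of how the number of accumulation steps could be chosen. The helper `accumulation_steps` is hypothetical, and the target effective batch size of 64 is an assumption chosen to match the T4 setup; the text only says that accumulation may be needed on a K80.

```python
import math


def accumulation_steps(target_batch: int, batch_per_step: int) -> int:
    """Number of mini-batches to accumulate before one optimizer update."""
    if target_batch <= 0 or batch_per_step <= 0:
        raise ValueError("batch sizes must be positive")
    return max(1, math.ceil(target_batch / batch_per_step))


# Tesla T4: 64 images fit in memory, so a single step is enough.
print(accumulation_steps(64, 64))  # 1
# Tesla K80 (assumed target of 64): two mini-batches of 32 per update.
print(accumulation_steps(64, 32))  # 2
```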

During training, checkpoints of the model are saved every thousand iterations, and shared on Google Drive.
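One possible way to back up checkpoints to a mounted Google Drive folder is sketched below. This is not necessarily how the notebook does it: the function name `sync_checkpoints`, the `*.pt` file pattern, and the folder names are all assumptions for illustration.

```python
import shutil
import tempfile
from pathlib import Path


def sync_checkpoints(src_dir, dst_dir, pattern="*.pt"):
    """Copy checkpoints missing from dst_dir and return their names."""
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    copied = []
    for ckpt in sorted(src.glob(pattern)):
        target = dst / ckpt.name
        if not target.exists():          # skip files already backed up
            shutil.copy2(ckpt, target)
            copied.append(ckpt.name)
    return copied


# Demo with temporary folders standing in for the model and Drive folders.
with tempfile.TemporaryDirectory() as tmp:
    models = Path(tmp) / "models"
    models.mkdir()
    (models / "model_1.pt").write_bytes(b"demo")   # hypothetical file name
    backup = Path(tmp) / "drive_backup"
    print(sync_checkpoints(models, backup))  # ['model_1.pt']
    print(sync_checkpoints(models, backup))  # []
```

On Colab, the destination would be a folder under the mounted Drive, and the function could be called periodically between training sessions.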

TODO

- DCGAN:

- StyleGAN:

- StyleGAN2:

- StyleGAN2-ADA:

- "Lightweight-GAN":